Building a Context-Aware Retriever in LangChain using Gemini and FAISS

Retrieval-Augmented Generation (RAG) systems are only as powerful as the retrievers that feed them information.

A good retriever doesn’t just find data; it filters, compresses, and contextualizes it so the language model can focus on what really matters.

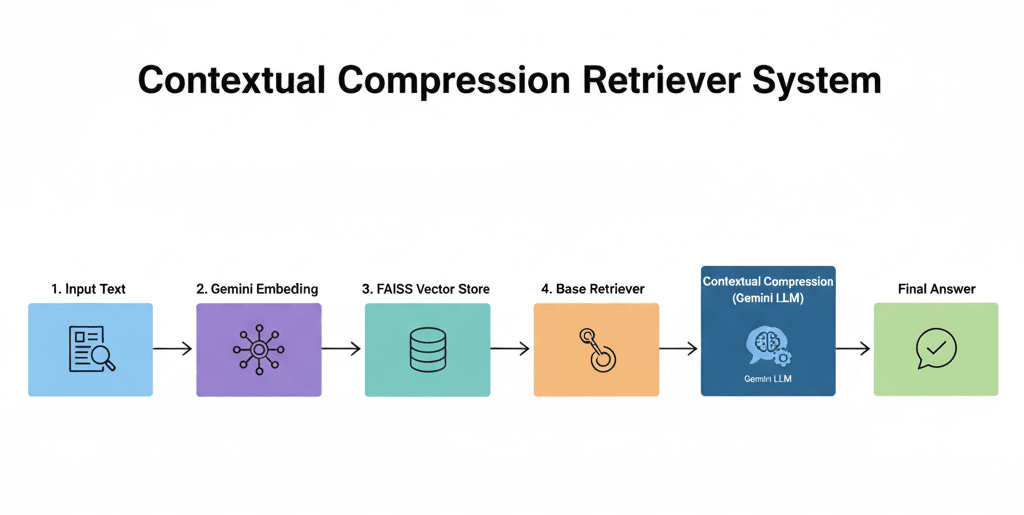

In this tutorial, you’ll learn how to build a Contextual Compression Retriever in LangChain, powered by Gemini embeddings, FAISS, and Gemini Flash as the compressor LLM.

This method ensures that your chatbot or AI assistant retrieves only the most relevant text segments, cutting out noise while keeping context intact.

What You’ll Learn

By following this tutorial, you’ll understand:

How FAISS retrieves text chunks efficiently

How to use Gemini Embeddings to represent documents

How Contextual Compression works in LangChain

How to combine a retriever with Gemini Flash for better accuracy

How to integrate the retriever with an LLM for Q&A

1. What is Contextual Compression?

When you run a standard vector-store retriever (backed by FAISS or Chroma, for example), it fetches the top-k documents most similar to the query.

But often, those documents are long and contain irrelevant sentences.

For example:

If you ask “What is photosynthesis?”, a document about “The Grand Canyon and photosynthesis” might appear, even though only one line in it is relevant.

A Contextual Compression Retriever solves this by combining two steps:

Retrieve top documents using a standard retriever (FAISS in our case).

Compress them using a language model, extracting only the parts relevant to the query.

The result is a clean, focused, and context-rich set of documents ready for the LLM.
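To make the two steps concrete, here is a minimal sketch in plain Python. It is not how LangChain implements them: word overlap stands in for embedding similarity, a sentence filter stands in for the LLM, and the helper names (words, retrieve, compress) are made up for illustration.

```python
import re

def words(text):
    """Lowercase word set used for a crude relevance score."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query, documents, k=2):
    """Step 1: rank whole documents by word overlap with the query."""
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]

def compress(query, document, stopwords=frozenset({"what", "is", "the"})):
    """Step 2: keep only sentences that share a content word with the query."""
    q = words(query) - stopwords
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    return " ".join(s for s in sentences if q & words(s))

documents = [
    "The Grand Canyon is a natural wonder. "
    "Photosynthesis is the process by which green plants convert sunlight into energy.",
    "Basketball was invented by Dr. James Naismith.",
]
query = "What is photosynthesis?"

top_docs = retrieve(query, documents, k=1)           # whole documents
compressed = [compress(query, d) for d in top_docs]  # only the relevant sentences
print(compressed)
```

The retrieved document still contains the Grand Canyon sentence; only the compression step removes it.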

2. Setting Up Your Environment

You’ll need the following packages installed:

pip install langchain langchain-community langchain-google-genai faiss-cpu python-dotenv

Make sure your .env file contains your Google API key:

GOOGLE_API_KEY=your_google_api_key_here

Note: If you’re pushing code to GitHub, include .env in your .gitignore to keep your API keys private.
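A missing key usually surfaces later as a confusing authentication error, so it can help to fail fast at startup. The sketch below is one way to do that; require_env is a hypothetical helper, not part of python-dotenv or LangChain, and the example passes a fake mapping so it runs without a real key.

```python
import os

def require_env(name, env=os.environ):
    """Return the value of an environment variable, or raise a clear error."""
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or shell environment"
        )
    return value

# Example with an explicit mapping so the sketch runs without a real key.
fake_env = {"GOOGLE_API_KEY": "your_google_api_key_here"}
print(require_env("GOOGLE_API_KEY", env=fake_env))
```

In the real script you would call require_env("GOOGLE_API_KEY") once, right after load_dotenv().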

3. Importing the Required Libraries

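We’ll use LangChain’s retriever components, FAISS for vector search, and Gemini for embeddings and LLM responses. The langchain.retrievers import paths below assume a pre-1.0 LangChain release; in LangChain 1.x these legacy retrievers appear to live in the separate langchain-classic package (imported as langchain_classic), so adjust the imports if they fail.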

from langchain_community.vectorstores import FAISS
from langchain.retrievers.contextual_compression import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import LLMChainExtractor
from langchain_core.documents import Document
from langchain_google_genai import ChatGoogleGenerativeAI, GoogleGenerativeAIEmbeddings
from dotenv import load_dotenv
import os

load_dotenv()

4. Creating Example Documents

We’ll create a few sample text documents that mix multiple topics, only some of which are related to photosynthesis.

This will show how the retriever focuses on relevant text.

docs = [
    Document(page_content=(
        """The Grand Canyon is one of the most visited natural wonders in the world.
        Photosynthesis is the process by which green plants convert sunlight into energy.
        Millions of tourists travel to see it every year. The rocks date back millions of years."""
    ), metadata={"source": "Doc1"}),
    Document(page_content=(
        """In medieval Europe, castles were built primarily for defense.
        The chlorophyll in plant cells captures sunlight during photosynthesis.
        Knights wore armor made of metal. Siege weapons were often used to breach castle walls."""
    ), metadata={"source": "Doc2"}),
    Document(page_content=(
        """Basketball was invented by Dr. James Naismith in the late 19th century.
        It was originally played with a soccer ball and peach baskets. NBA is now a global league."""
    ), metadata={"source": "Doc3"}),
    Document(page_content=(
        """The history of cinema began in the late 1800s. Silent films were the earliest form.
        Thomas Edison was among the pioneers. Photosynthesis does not occur in animal cells.
        Modern filmmaking involves complex CGI and sound design."""
    ), metadata={"source": "Doc4"})
]

5. Creating the FAISS Vector Store

We’ll use Gemini embeddings to convert the text into numerical vectors, which FAISS can index and search efficiently.

embedding_model = GoogleGenerativeAIEmbeddings(
    model="models/gemini-embedding-001",
    google_api_key=os.getenv("GOOGLE_API_KEY")
)

vectorstore = FAISS.from_documents(docs, embedding_model)
base_retriever = vectorstore.as_retriever(search_kwargs={"k": 5})

Here:

FAISS stores the document embeddings.

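k=5 asks for the 5 most similar chunks per query. Because this example indexes only 4 documents, all 4 come back, and the compressor does the real filtering.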
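Conceptually, a flat vector index compares the query vector against every stored vector and returns the nearest ones. The sketch below shows that idea in plain Python, assuming Euclidean (L2) distance; the 3-dimensional vectors are invented for illustration, and real FAISS uses optimized native code rather than a Python loop.

```python
import math

def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def top_k(query_vec, index, k):
    """Return up to k (distance, doc_id) pairs, nearest first."""
    scored = sorted(
        (l2_distance(query_vec, vec), doc_id) for doc_id, vec in index.items()
    )
    return scored[:k]

# Hypothetical 3-dimensional embeddings; real embeddings have far more dimensions.
index = {
    "Doc1": [0.9, 0.1, 0.0],
    "Doc2": [0.7, 0.3, 0.1],
    "Doc3": [0.0, 0.1, 0.9],
    "Doc4": [0.5, 0.5, 0.2],
}
query_vec = [1.0, 0.0, 0.0]

results = top_k(query_vec, index, k=5)  # k exceeds the index size
for distance, doc_id in results:
    print(f"{doc_id}: {distance:.3f}")
```

Asking for k=5 from a 4-vector index simply returns all 4, ordered from nearest to farthest.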

6. Creating the Compressor with Gemini Flash

The compressor uses a language model to extract only the parts of the retrieved text that directly answer your question.

llm = ChatGoogleGenerativeAI(
    model="gemini-2.5-flash-lite",
    api_key=os.getenv("GOOGLE_API_KEY")
)

compressor = LLMChainExtractor.from_llm(llm)

Gemini Flash-Lite is fast and inexpensive, which matters here because the extractor makes one LLM call per retrieved document.
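The extraction step can be pictured as a loop: prompt the model once per document, keep what it returns, and drop documents for which it signals that nothing is relevant. The sketch below mimics that contract with a fake model so it runs offline. The prompt wording, the NO_OUTPUT sentinel, and the function names are assumptions for illustration, not LangChain’s exact internals.

```python
NO_OUTPUT = "NO_OUTPUT"  # sentinel the model is asked to return when nothing is relevant

PROMPT = (
    "Given the question and context, extract any part of the context that is "
    "relevant to the question, verbatim. If none is relevant, return {sentinel}.\n\n"
    "Question: {question}\nContext: {context}"
)

def compress_documents(question, documents, llm):
    """Call the LLM once per document and drop documents with no relevant text."""
    kept = []
    for text in documents:
        prompt = PROMPT.format(sentinel=NO_OUTPUT, question=question, context=text)
        extract = llm(prompt).strip()
        if extract and extract != NO_OUTPUT:
            kept.append(extract)
    return kept

def fake_llm(prompt):
    """Stand-in for a real model: returns context sentences mentioning photosynthesis."""
    context = prompt.split("Context: ", 1)[1]
    hits = [s.strip() for s in context.split(".") if "photosynthesis" in s.lower()]
    return ". ".join(hits) + "." if hits else NO_OUTPUT

documents = [
    "Knights wore armor made of metal. "
    "The chlorophyll in plant cells captures sunlight during photosynthesis.",
    "Basketball was invented by Dr. James Naismith in the late 19th century.",
]
kept = compress_documents("What is photosynthesis?", documents, fake_llm)
print(kept)
```

Two documents go in and one extract comes out: the basketball document is discarded rather than passed along empty.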

7. Building the Contextual Compression Retriever

Now, we combine the FAISS retriever with our LLM-based compressor.

compression_retriever = ContextualCompressionRetriever(
    base_retriever=base_retriever,
    base_compressor=compressor
)

8. Querying the Retriever

Let’s test it with a query about photosynthesis.

query = "What is photosynthesis?"

compressed_results = compression_retriever.invoke(query)

print("\n===== Contextually Compressed Results =====")
for i, doc in enumerate(compressed_results):
    print(f"\n--- Result {i+1} ---")
    print(doc.page_content)

Example Output

--- Result 1 ---

Photosynthesis is the process by which green plants convert sunlight into energy.

--- Result 2 ---

The chlorophyll in plant cells captures sunlight during photosynthesis.

--- Result 3 ---

Photosynthesis does not occur in animal cells.

The retriever extracts only the relevant text segments and ignores unrelated parts such as castles and cinema. Doc3, which never mentions photosynthesis, yields no extract and is dropped entirely.
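To get a rough sense of how much context compression saves, we can compare word counts for Doc1 before and after. Word count is only a proxy for tokens, so treat the ratio as an estimate.

```python
original = (
    "The Grand Canyon is one of the most visited natural wonders in the world. "
    "Photosynthesis is the process by which green plants convert sunlight into energy. "
    "Millions of tourists travel to see it every year. "
    "The rocks date back millions of years."
)
compressed = (
    "Photosynthesis is the process by which green plants convert sunlight into energy."
)

# Whitespace-separated words as a crude stand-in for model tokens.
original_words = len(original.split())
compressed_words = len(compressed.split())
ratio = compressed_words / original_words

print(f"{original_words} words -> {compressed_words} words ({ratio:.0%} of the original)")
```

For Doc1 this works out to 42 words reduced to 12, so the final LLM call sees less than a third of the original text.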

9. Integrating with an LLM for Q&A

Finally, we’ll connect this retriever to a RetrievalQA chain, allowing the LLM to generate a final summarized answer.

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    retriever=compression_retriever,
    chain_type="stuff"
)

response = qa_chain.invoke({"query": "Explain photosynthesis simply."})

print("\n===== LLM Response =====")
print(response["result"])

Example Output

Photosynthesis is the process through which plants use sunlight, water, and carbon dioxide to produce their own food and release oxygen.

This shows how Gemini efficiently uses compressed, relevant context for an accurate and simple explanation.
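The "stuff" chain type places every retrieved snippet into a single prompt and makes one LLM call. A rough sketch of that assembly step follows; the template wording and the build_stuff_prompt helper are illustrative assumptions, not LangChain’s exact prompt.

```python
def build_stuff_prompt(question, contexts):
    """Join all retrieved snippets into a single prompt for one LLM call."""
    context_block = "\n\n".join(contexts)
    return (
        "Use the following pieces of context to answer the question at the end.\n\n"
        f"{context_block}\n\n"
        f"Question: {question}\n"
        "Helpful Answer:"
    )

# The three compressed snippets from the retriever output shown earlier.
contexts = [
    "Photosynthesis is the process by which green plants convert sunlight into energy.",
    "The chlorophyll in plant cells captures sunlight during photosynthesis.",
    "Photosynthesis does not occur in animal cells.",
]
prompt = build_stuff_prompt("Explain photosynthesis simply.", contexts)
print(prompt)
```

Because everything lands in one prompt, shorter snippets translate directly into a smaller, cheaper request.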

10. How It All Works Together

Documents are embedded using Gemini embeddings.

FAISS retrieves the top relevant chunks.

Gemini Flash (LLM) compresses those chunks contextually.

The QA chain uses this clean, compressed text to generate the answer.

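This architecture can improve efficiency (fewer tokens reach the final prompt), accuracy (less distracting text), and interpretability (you can inspect exactly which sentences were kept), which are key advantages for scalable AI systems.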

Conclusion

You’ve now built a context-aware retriever pipeline using LangChain, FAISS, and Gemini.

Unlike a traditional retriever, this setup doesn’t just fetch documents; it filters them against the query so that your language model sees only what’s relevant.

This same principle powers modern RAG-based chat systems and intelligent assistants.
