What is Retrieval-Augmented Generation (RAG) and Why It Matters in AI

Introduction

Why Do Chatbots Sometimes “Make Things Up”?

Have you ever asked a chatbot a simple question like “What’s the latest iPhone model?” and it gave you an answer that sounded confident but turned out to be completely wrong? This problem is so common that researchers gave it a name: hallucination.

Hallucinations happen because most large language models (LLMs) generate answers based on the patterns they’ve learned from training data. They don’t actually “know” what’s true right now. So when they face a question outside their memory, they might invent a response that sounds correct but isn’t.

This is where Retrieval-Augmented Generation (RAG) comes in. Instead of letting the AI guess blindly, RAG allows the model to look up real information from trusted sources before giving an answer. Think of it like giving your AI a built-in “research assistant” that fetches relevant facts, so its responses are not only fluent but also accurate and grounded in reality.

What is RAG?

Q: So, what exactly is Retrieval-Augmented Generation (RAG)?

A: RAG is an AI framework that combines two powerful steps: retrieval (finding relevant information from an external source) and generation (using that information to create a natural, human-like response).

Q: Why is it called “augmented”?

A: Because instead of relying only on what the model already knows from training, we augment its ability with real, external knowledge. It’s like giving your AI access to an open-book exam rather than asking it to rely only on memory.

Q: Can you give a simple example?

A: Sure. Imagine you ask a chatbot: “What are the symptoms of the latest COVID-19 variant?”

A regular LLM might give you outdated or even incorrect answers.

A RAG-powered system, on the other hand, will retrieve the latest medical updates from trusted sources and then generate an answer using that data, making the response more accurate and reliable.

How Does RAG Work?

Q: How does RAG actually work behind the scenes?

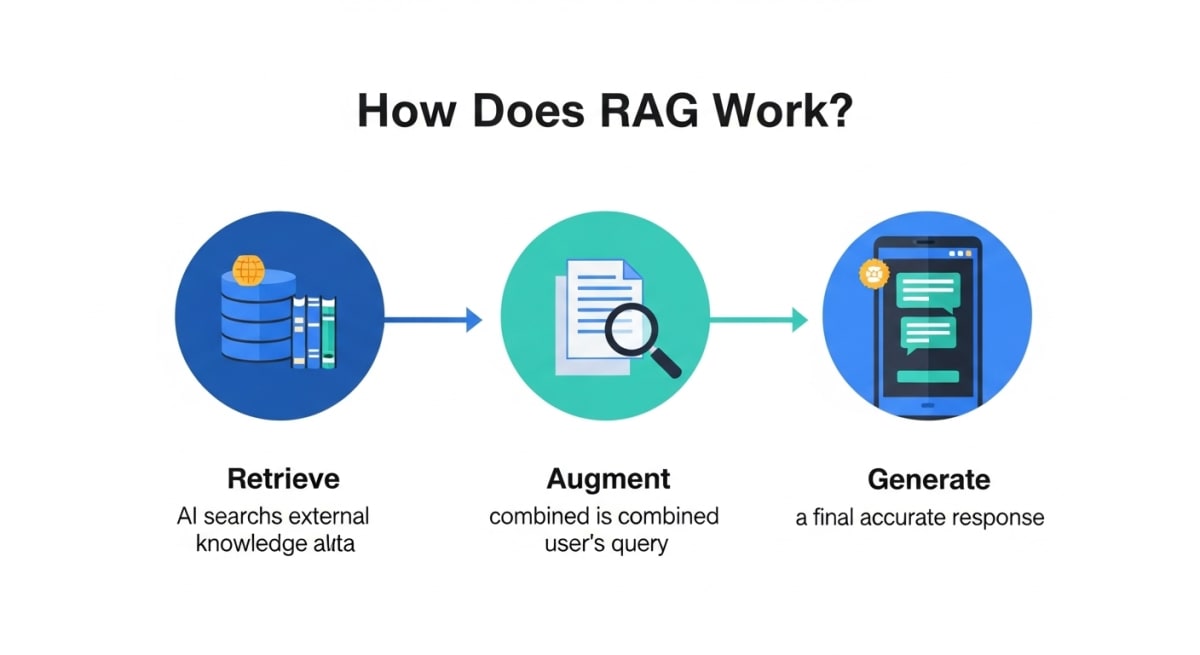

A: Think of RAG as a three-step process:

Retrieve – When you ask a question, the system searches external sources (like a database, documents, or the web) to find the most relevant information.

Analogy: It’s like a student flipping through textbooks to find the right page before answering.

Augment – The retrieved information is added (or “augmented”) to your question so the AI has extra context.

Analogy: The student puts sticky notes in the textbook to highlight key details.

Generate – Finally, the AI uses its language generation skills to craft a natural-sounding response, based on both its training and the fresh information it retrieved.

Analogy: The student then writes a clear answer in their own words.
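To make the three steps concrete, here is a minimal sketch in Python. It is an illustration only: the `DOCUMENTS` list, the word-overlap ranking, and the `generate` placeholder are assumptions made for this example, and a real system would typically use a search index or vector database plus an actual language model call.

```python
import re

# Hypothetical in-memory knowledge source; a real system would query a
# database, a document store, or a search index.
DOCUMENTS = [
    "RAG combines retrieval with text generation.",
    "Hallucination is when a model invents an answer that sounds correct.",
    "Caching stores earlier results so repeated queries are faster.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Step 1: rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def augment(question: str, context: list[str]) -> str:
    """Step 2: add the retrieved text to the question as extra context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"


def generate(prompt: str) -> str:
    """Step 3: placeholder for a language model call (an assumption, not a real API)."""
    return f"[model output for a prompt of {len(prompt)} characters]"


question = "What is hallucination in a model?"
context = retrieve(question, DOCUMENTS)
prompt = augment(question, context)
print(prompt)
print(generate(prompt))
```

The point of the sketch is the shape of the pipeline: the model never sees the bare question, only the question plus whatever the retrieval step found.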

Q: What makes this better than a normal chatbot?

A: A normal chatbot only uses what it remembers from training (which might be outdated). RAG, on the other hand, can pull in real, up-to-date knowledge every time you ask something, making it far more reliable.

Why Do We Need RAG?

Q: Aren’t large language models already smart enough?

A: They are impressive, but they have one big limitation: they only know what they were trained on. If the training data is outdated or missing certain information, the model may guess, and that’s how hallucinations happen.

Q: So what problem does RAG solve?

A: RAG addresses the issue of accuracy and trust. By connecting the model to external knowledge sources, it helps ensure the AI doesn’t just generate text; it grounds its answers in real data.

Q: Why is this so important?

A: Because in areas like healthcare, finance, law, and education, wrong answers aren’t just inconvenient; they can be harmful or costly. Businesses and users need chatbots that are both smart and trustworthy.

Q: Can you give an example?

A: Imagine a doctor using an AI assistant to review the latest medical research. Without RAG, the model might rely on outdated training data from years ago. With RAG, it can pull up the most recent studies and guidelines, leading to safer and more reliable recommendations.

Where is RAG Used?

Q: In what areas is RAG being used today?

A: RAG is already making an impact across many industries. Here are some of the most common use cases:

Search Engines – Instead of giving you a list of links, RAG can fetch documents and summarize them into a clear, direct answer.

Customer Support – RAG-powered chatbots can pull answers from a company’s knowledge base, FAQs, or manuals, reducing the need for human agents.

Healthcare – Doctors and patients can get AI answers grounded in the latest medical research or treatment guidelines.

Legal Research – Lawyers can use RAG systems to search case law and generate summaries, saving hours of manual work.

Education – Students can ask questions and get answers drawn directly from their textbooks or study materials.

Coding Assistants – Developers can ask about a programming issue, and RAG-based tools retrieve relevant docs, then generate helpful explanations.

Q: What makes these examples powerful?

A: In each case, the AI isn’t just “talking smart”; it’s pulling in real information from a known source before responding. That makes it more useful, trustworthy, and practical.

What Are the Benefits of RAG?

Q: What’s the biggest advantage of RAG compared to normal AI models?

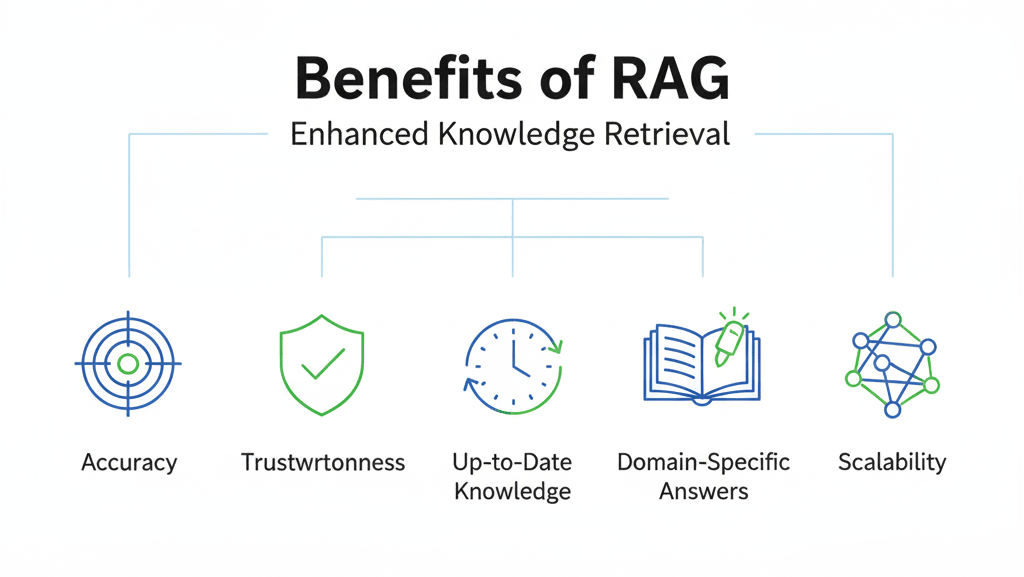

A: The biggest benefit is accuracy. RAG reduces the risk of hallucinations by grounding answers in real, retrieved information.

Q: Are there other benefits?

A: Yes, several:

Up-to-Date Knowledge – Unlike static models that freeze after training, RAG can fetch the latest information whenever needed.

Trustworthiness – Users are more likely to trust AI that can cite or point to sources.

Domain-Specific Support – RAG can be connected to private databases (like company manuals, medical research, or legal cases) for tailored, reliable answers.

Efficiency – It saves time by combining retrieval and summarization in one step, with no need to manually search and then interpret results.

Scalability – Businesses can build one RAG system that adapts to multiple domains by simply plugging in new knowledge sources, without retraining the underlying model.
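Two of the benefits above, pointing to sources and plugging in new knowledge sources, can be sketched in a few lines of Python. This is a simplified illustration: the `KnowledgeRouter` class, the source names, and the keyword matching are all hypothetical and stand in for whatever retrieval layer a real system uses.

```python
from dataclasses import dataclass, field


@dataclass
class Passage:
    source: str  # where the text came from, so the answer can cite it
    text: str


@dataclass
class KnowledgeRouter:
    """Holds several named knowledge sources behind one search interface."""
    sources: dict[str, list[str]] = field(default_factory=dict)

    def add_source(self, name: str, texts: list[str]) -> None:
        """Register a new knowledge source under a name."""
        self.sources[name] = list(texts)

    def search(self, keyword: str) -> list[Passage]:
        """Return every passage containing the keyword, tagged with its source."""
        kw = keyword.lower()
        return [
            Passage(name, text)
            for name, texts in self.sources.items()
            for text in texts
            if kw in text.lower()
        ]


router = KnowledgeRouter()
router.add_source("hr_manual", ["Employees accrue leave monthly."])
# Adding a new domain means adding a source, not retraining a model.
router.add_source("legal_cases", ["The court reviewed the leave policy dispute."])

for passage in router.search("leave"):
    print(f"{passage.text} [source: {passage.source}]")
```

Because every passage carries its source name, the final answer can show users where each fact came from.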

Q: Why does this matter for Agentic AI?

A: Because truly “agentic” AI that can act with autonomy needs a reliable knowledge base to make good decisions. RAG gives it that foundation.

What Are the Challenges of RAG?

Q: If RAG is so powerful, what’s the catch?

A: Like any technology, RAG has its challenges. Here are the main ones:

Latency – Since RAG needs to search external sources before answering, it can sometimes feel slower than a regular chatbot.

Cost – Fetching and processing large amounts of data can increase computing costs. For businesses running at scale, this adds up quickly.

Data Quality – RAG is only as good as the data it retrieves. If the external source is biased, incomplete, or unreliable, the AI’s answer may still be flawed.

Complexity – Building a RAG system requires both retrieval infrastructure (databases, search engines) and generation models, making it harder to set up than a simple chatbot.

Security & Privacy – If the AI retrieves from sensitive or private documents, ensuring proper access control and data protection is critical.
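For the security and privacy point, one common approach is to filter retrieved documents by the user's permissions before any text reaches the model. The sketch below assumes a simple role-based scheme; the `Document` class, the role names, and `filter_by_access` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this document


def filter_by_access(docs: list[Document], user_roles: set[str]) -> list[Document]:
    """Keep only documents that share at least one role with the user."""
    return [d for d in docs if d.allowed_roles & user_roles]


docs = [
    Document("Public FAQ: how to reset a password.", frozenset({"customer", "staff"})),
    Document("Internal salary bands.", frozenset({"hr"})),
]

# A customer should only ever see the public document.
visible = filter_by_access(docs, {"customer"})
print([d.text for d in visible])
```

Filtering at retrieval time matters because anything placed in the prompt can end up in the generated answer.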

Q: So does this mean RAG isn’t worth it?

A: Not at all. These challenges can be managed with the right design, such as caching, optimized search systems, and secure data pipelines. The benefits usually outweigh the drawbacks.
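As an example of the caching idea, here is a small sketch using Python's standard `functools.lru_cache`. The `slow_search` function is a stand-in for an expensive retrieval call and is an assumption of this example, as is the simple query normalization.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the slow lookup actually runs


def slow_search(query: str) -> tuple[str, ...]:
    """Stand-in for an expensive retrieval call (hypothetical)."""
    CALLS["count"] += 1
    return (f"result for {query}",)


@lru_cache(maxsize=256)
def cached_search(normalized_query: str) -> tuple[str, ...]:
    """Run the slow search once per distinct query and remember the result."""
    return slow_search(normalized_query)


def search(query: str) -> tuple[str, ...]:
    """Normalize the query so trivially different phrasings share a cache entry."""
    return cached_search(" ".join(query.lower().split()))


search("What is RAG?")
search("  what is  rag? ")  # same query after normalization, served from cache
print(CALLS["count"])
```

Caching trades freshness for speed: a cached result can go stale, so systems that depend on up-to-date answers usually limit how long entries are kept.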

What Is the Future of RAG in Agentic AI?

Q: How does RAG fit into the bigger picture of Agentic AI?

A: Agentic AI refers to AI systems that can act with more autonomy: planning tasks, making decisions, and solving problems. For such agents to be truly effective, they need reliable, up-to-date knowledge. RAG provides that foundation.

Q: What changes can we expect in the future?

A: Several exciting directions are emerging:

Multimodal RAG – Not just text, but retrieval of images, videos, code, and audio to support richer answers.

Personalized RAG – AI agents that retrieve not only general knowledge but also personalized information (like your calendar, preferences, or past chats) in a secure way.

Real-Time RAG – Faster retrieval pipelines that make it possible to answer with up-to-the-second accuracy, like live news or stock updates.

Domain-Specific Agents – Lawyers, doctors, engineers, and researchers will use RAG-powered AI agents tailored to their fields.

Human-AI Collaboration – RAG will allow AI agents to explain where their answers come from, making it easier for humans to trust and verify them.

Q: Why does this matter for the future of AI?

A: Because without reliable knowledge, even the smartest AI agent can make poor decisions. With RAG, we move closer to AI systems that are not only intelligent but also responsible, trustworthy, and truly useful in the real world.

Conclusion: Why RAG Matters

Chatbots and AI assistants are getting smarter every day, but without reliable information, even the best models can stumble. Retrieval-Augmented Generation (RAG) bridges that gap. By combining retrieval with generation, it creates AI systems that are not only fluent but also grounded in real knowledge.

For businesses, this means more trustworthy customer service. For professionals, it means better tools for research, law, healthcare, and education. And for Agentic AI, the next stage of autonomous, decision-making systems, RAG provides the essential foundation for safe, accurate, and responsible intelligence.
