Trust in Agentic AI: The Foundation of Safe and Scalable Autonomy

An Educational Deep-Dive for Students, Developers, and Future AI Leaders

Agentic AI, meaning autonomous systems capable of reasoning, taking independent actions, and collaborating with humans or other AI agents, represents one of the most transformative shifts in modern technology. Unlike traditional AI, which typically provides only predictions or recommendations, Agentic AI can perform tasks, make decisions, evaluate risks, and interact autonomously with digital systems.

But with this level of autonomy comes a crucial question:

How can we trust an AI system that acts on its own?

Trust in Agentic AI is not a single feature that can simply be switched on. It is a multi-layered structure built on transparency, safety, consistency, and accountability. Without trust, organizations hesitate to adopt Agentic AI, users may resist relying on its decisions, and regulators scrutinize every automated action.

This article provides an educational deep-dive into the core dimensions of trust in Agentic AI, why trust is essential, the risks of trust breakdowns, and the engineering principles that help build safe, ethical, and reliable autonomous systems. Its aim is to guide students, developers, and future AI leaders as they navigate the emerging world of Agentic AI.

Why Trust Is the Cornerstone of Agentic AI

Every industry (insurance, healthcare, banking, logistics, retail) runs on trust.

People expect:

Services to be delivered reliably

Businesses to operate ethically

Information to stay secure

Decisions to be made fairly

When autonomous AI agents take action without human supervision, this expectation becomes even stronger.

Why trust matters more with Agentic AI:

Agents don’t just advise; they act

Agents can trigger workflows, approve requests, or interact with customers

Agents can collaborate with multiple other agents to solve complex tasks

Their decisions often have real-world financial, regulatory, or safety consequences

Imagine an AI underwriting agent approving or rejecting a multimillion-dollar insurance policy.

Would you rely on it without understanding how it made its decision?

This is why trust is not optional; it is the core requirement for adoption.

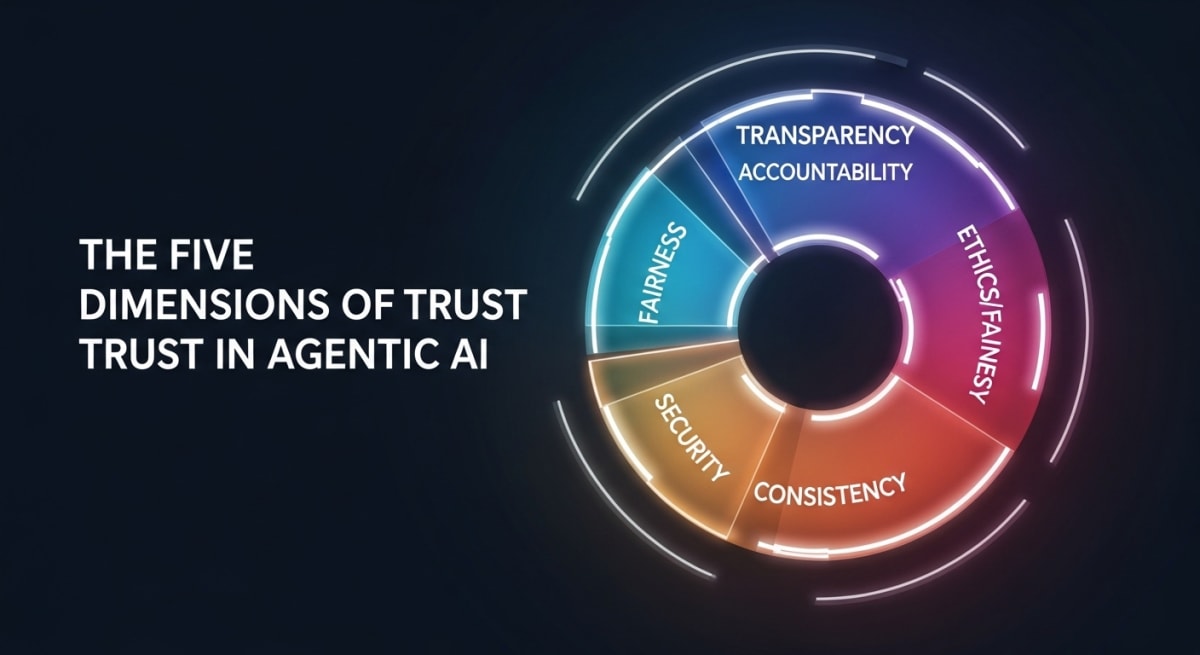

The Five Dimensions of Trust in Agentic AI

To build systems people can rely on, Agentic AI must demonstrate five essential qualities.

1. Transparency: Understanding How Decisions Are Made

A trustworthy AI system cannot operate like a “black box.”

Users must be able to see:

what data was used

which steps the agent followed

which risks were detected

how the final decision was calculated

For example:

Instead of simply returning “Rejected,” a transparent underwriting agent should explain:

“Application declined due to high flood exposure and missing fire safety documentation.”

Transparency builds confidence, supports audits, and allows humans to understand and validate decisions.
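A minimal sketch of this idea in Python: instead of a bare label, the agent returns a decision object that carries its reasons and the inputs it consulted. The `Decision` type, the field names `flood_risk_score` and `has_fire_safety_docs`, and the 0.7 threshold are hypothetical illustrations, not a real underwriting model.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    outcome: str                                         # "Approved" or "Declined"
    reasons: list[str] = field(default_factory=list)     # human-readable grounds
    data_used: list[str] = field(default_factory=list)   # inputs the agent consulted

    def explain(self) -> str:
        if not self.reasons:
            return f"Application {self.outcome.lower()}."
        return f"Application {self.outcome.lower()} due to {' and '.join(self.reasons)}."

# Hypothetical threshold and field names, for illustration only.
FLOOD_RISK_LIMIT = 0.7

def underwrite(application: dict) -> Decision:
    reasons = []
    if application["flood_risk_score"] > FLOOD_RISK_LIMIT:
        reasons.append("high flood exposure")
    if not application["has_fire_safety_docs"]:
        reasons.append("missing fire safety documentation")
    outcome = "Declined" if reasons else "Approved"
    return Decision(outcome, reasons, data_used=sorted(application))

decision = underwrite({"flood_risk_score": 0.85, "has_fire_safety_docs": False})
print(decision.explain())
# Application declined due to high flood exposure and missing fire safety documentation.
```

Because the reasons are stored as data rather than only as a sentence, the same object can feed both the user-facing explanation and an audit record.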

2. Accountability: Knowing Who Is Responsible

In a multi-agent ecosystem, dozens of agents may collaborate. If something goes wrong, organisations must know:

which agent took the action

what authority it had

what data it accessed

which rules it applied

Clear accountability prevents confusion when decisions are challenged and ensures that responsibility is never lost between agents.
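One way to keep these four questions answerable is to attach them to every action as a structured record. The sketch below assumes a hypothetical `ActionRecord` schema with invented agent, permission, and rule names; it shows the shape of the data, not any particular platform's format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)  # frozen: a record cannot be edited after creation
class ActionRecord:
    case_id: str
    agent_id: str                    # which agent took the action
    action: str                      # what it did
    authority: str                   # the permission it acted under
    data_accessed: tuple[str, ...]   # which data sources it read
    rules_applied: tuple[str, ...]   # which rules or policies it applied

def responsible_agents(records: list[ActionRecord], case_id: str) -> list[str]:
    """Every agent that acted on a case, in order, so no step is left unowned."""
    return [r.agent_id for r in records if r.case_id == case_id]

# Hypothetical agents, permissions, and rule names.
records = [
    ActionRecord("C-101", "intake-agent", "extract_fields", "read:application",
                 ("application_form",), ("schema_v2",)),
    ActionRecord("C-101", "underwriting-agent", "decline", "decide:property_policy",
                 ("application_form", "flood_map"), ("flood_limit_rule",)),
]
print(responsible_agents(records, "C-101"))  # ['intake-agent', 'underwriting-agent']
```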

3. Security: Protecting Data and Access

Because agents interact with multiple systems, the attack surface expands.

Zero-trust principles become essential:

No agent should have unnecessary permissions

Every request must verify identity and intent

Sensitive information must be protected

Example:

An underwriting agent should not automatically gain access to full customer financial history unless required by context and approved.
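The third requirement, protecting sensitive information, can be partly illustrated with a redaction step that masks sensitive values before text is passed to another agent or written to a log. This is a deliberately crude sketch: the two regular expressions are illustrative assumptions that will miss many formats, and a production system would rely on a vetted PII-detection or data-protection service instead.

```python
import re

# Illustrative patterns only (an assumption); real deployments would use a
# vetted PII-detection service that covers far more formats.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
}

def redact(text: str) -> str:
    """Mask sensitive values before text leaves the agent that holds them."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111 on file."))
# Contact [EMAIL], card [CARD] on file.
```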

4. Consistency: Delivering Fair and Predictable Outcomes

If two similar cases produce significantly different results, confidence collapses.

Trust requires:

consistent decision rules

stable model performance

repeatable outputs for similar inputs

Inconsistent behaviour not only frustrates users but also undermines fairness and regulatory compliance.
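Consistency can be tested rather than assumed. The sketch below, using hypothetical function names and a made-up 0.7 threshold, checks two things: that the same input always yields the same outcome, and that a fixed "golden set" of previously approved cases still produces the approved results after a model or rule change.

```python
import random
from typing import Callable

Decide = Callable[[dict], str]

def is_repeatable(decide: Decide, case: dict, runs: int = 20) -> bool:
    """The same input should always produce the same outcome."""
    return len({decide(case) for _ in range(runs)}) == 1

def golden_set_drift(decide: Decide, golden: list[tuple[dict, str]]) -> list[dict]:
    """Golden cases whose outcome no longer matches the approved result."""
    return [case for case, expected in golden if decide(case) != expected]

# Hypothetical rule: deterministic, so identical inputs give identical outputs.
def rule_decide(case: dict) -> str:
    return "Declined" if case["flood_risk_score"] > 0.7 else "Approved"

# Hidden randomness (e.g. unseeded sampling) makes outcomes unrepeatable.
def noisy_decide(case: dict) -> str:
    score = case["flood_risk_score"] + random.uniform(-0.2, 0.2)
    return "Declined" if score > 0.7 else "Approved"

golden = [({"flood_risk_score": 0.9}, "Declined"), ({"flood_risk_score": 0.3}, "Approved")]
print(is_repeatable(rule_decide, {"flood_risk_score": 0.72}))  # True
print(golden_set_drift(rule_decide, golden))                   # []
```

Running `is_repeatable` against `noisy_decide` with enough runs will almost always return `False`, which is exactly the kind of instability the checks are meant to surface before deployment.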

5. Ethics & Fairness: Preventing Bias and Protecting People

Agentic AI must uphold societal values:

no discrimination

no unfair penalisation

no hidden biases in data

respect for privacy and personal rights

For example:

An AI hiring agent must not inherit historical biases against certain groups.

Ethical design ensures that AI supports, rather than harms, human decision-making.
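A common first screening check for bias in selection decisions, such as those of the hiring agent above, is to compare selection rates across groups. The sketch below computes the adverse impact ratio (lowest group rate divided by highest) and flags ratios under 0.8, the "four-fifths" rule of thumb from US employment-selection guidance. The group labels and counts are invented, and passing this single check does not by itself establish that a system is fair.

```python
from collections import defaultdict

def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of positive outcomes per group, from (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += int(chosen)
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratio(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions).values()
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0

# Invented data: group A selected 50 of 100 times, group B 30 of 100 times.
decisions = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
ratio = adverse_impact_ratio(decisions)
print(f"{ratio:.2f}", "flag for review" if ratio < 0.8 else "within threshold")
# 0.60 flag for review
```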

What Happens When Trust Breaks Down?

Lack of trust leads to serious consequences:

Opaque decisions → users and regulators cannot explain results

Accountability gaps → unclear ownership when errors occur

Security breaches → compromised agents gaining unauthorised access to systems and data

Unpredictable outcomes → inconsistent decisions eroding confidence

Reputation damage → loss of customer trust and market credibility

Without trust, even the most advanced Agentic AI becomes unusable.

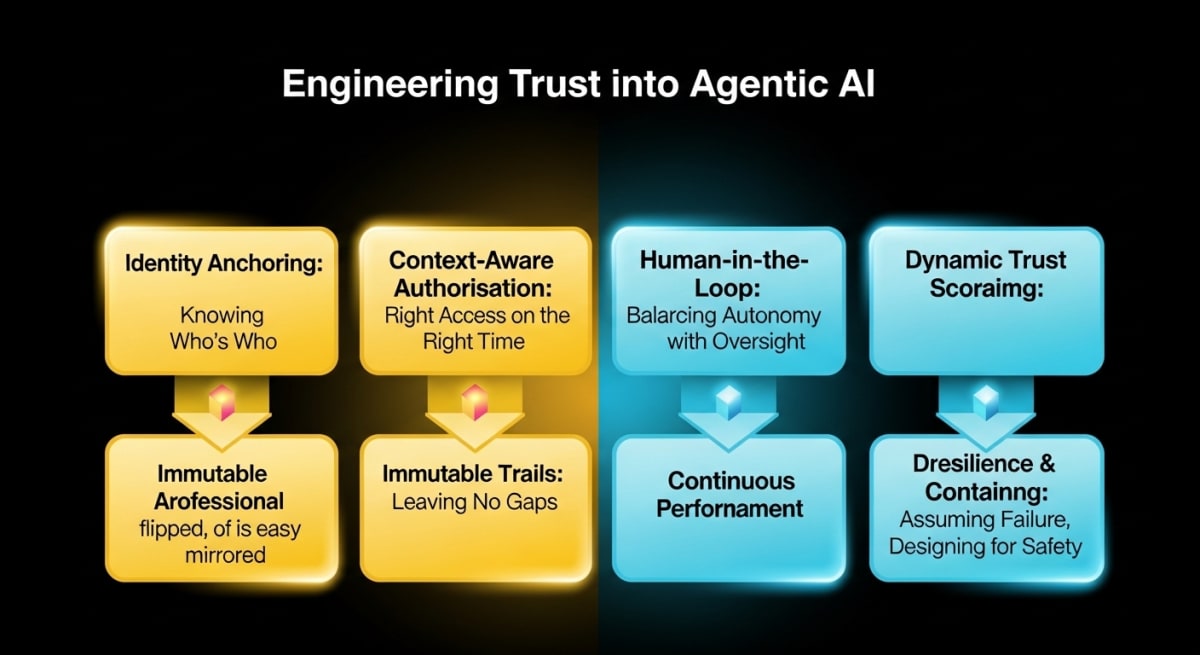

Engineering Trust: The Six Principles for Building Trusted Agentic AI

To build reliable, safe, and accountable autonomous systems, engineers must embed trust into the architecture.

Below are the six foundational principles.

1. Identity Anchoring: Every Agent Must Have a Verifiable Identity

Just like employees have secure logins, AI agents must also have:

unique identities

signed certificates

traceable origin

This prevents impersonation and enables accurate auditing.
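As a simplified illustration, the sketch below issues an agent credential and verifies it with an HMAC signature from Python's standard library. This is an assumption made to keep the example self-contained: real deployments typically use asymmetric keys and X.509 certificates (for example via mutual TLS), so that verifiers never hold the signing secret. The key, agent ID, and origin strings are hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: a secret held only by the platform's identity service. Real
# deployments would typically use asymmetric keys / X.509 certificates instead.
REGISTRY_KEY = b"demo-only-secret"

def _sign(claims: dict) -> str:
    payload = json.dumps(claims, sort_keys=True).encode()
    return hmac.new(REGISTRY_KEY, payload, hashlib.sha256).hexdigest()

def issue_identity(agent_id: str, origin: str) -> dict:
    """Create a credential binding a unique agent ID to its traceable origin."""
    claims = {"agent_id": agent_id, "origin": origin}
    return {**claims, "signature": _sign(claims)}

def verify_identity(credential: dict) -> bool:
    """Reject credentials whose claims were altered or never signed."""
    claims = {k: v for k, v in credential.items() if k != "signature"}
    presented = str(credential.get("signature", "")).encode()
    return hmac.compare_digest(_sign(claims).encode(), presented)  # constant-time

cred = issue_identity("underwriting-agent-07", "team:commercial-lines")
forged = {**cred, "agent_id": "pricing-agent-01"}  # impersonation attempt
print(verify_identity(cred), verify_identity(forged))  # True False
```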

2. Context-Aware Authorisation: Access Only What Is Needed

Agents should never get broad permissions.

Instead, access must depend on:

role

task

risk level

context

Example:

A pricing agent may access building details but not personal financial history unless explicitly required.
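The pricing-agent example can be expressed as a small deny-by-default policy check that weighs role, task, resource, risk level, and context together. The `POLICY` table, the role and task names, and the `approved_by_human` flag are hypothetical; real systems usually delegate this logic to a dedicated policy engine.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AccessRequest:
    role: str                        # e.g. "pricing"
    task: str                        # e.g. "quote_building"
    resource: str                    # e.g. "building_details"
    risk_level: str                  # "low", "medium" or "high"
    approved_by_human: bool = False  # context: explicit sign-off recorded

# Hypothetical policy: resources each (role, task) pair may read.
POLICY = {
    ("pricing", "quote_building"): {"building_details", "claims_history"},
    ("pricing", "affordability_check"): {"building_details", "financial_history"},
}
SENSITIVE = {"financial_history"}

def authorise(req: AccessRequest) -> bool:
    if req.resource not in POLICY.get((req.role, req.task), set()):
        return False  # deny by default: not needed for this role and task
    if req.resource in SENSITIVE and not req.approved_by_human:
        return False  # sensitive data requires explicit approval
    if req.risk_level == "high" and not req.approved_by_human:
        return False  # high-risk contexts escalate to a human
    return True

print(authorise(AccessRequest("pricing", "quote_building", "building_details", "low")))   # True
print(authorise(AccessRequest("pricing", "quote_building", "financial_history", "low")))  # False
```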

3. Immutable Audit Trails: Recording Every Action Permanently

Every agent action must be:

logged

time-stamped

tamper-proof

replayable

These logs help with:

troubleshooting

regulatory audits

verifying decisions

tracking errors across agent networks
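A common way to make a log tamper-evident is to hash-chain it: each entry stores the hash of the previous entry, so altering any past record breaks the chain. The in-memory `AuditLog` class below is a minimal sketch with hypothetical field names; it detects tampering but cannot prevent it, so a real system would also write entries to append-only or write-once storage.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class AuditLog:
    """Append-only, hash-chained log (a hypothetical sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        fields = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(fields, sort_keys=True).encode()).hexdigest()

    def append(self, agent_id: str, action: str, details: dict) -> None:
        entry = {
            "agent_id": agent_id,
            "action": action,
            "details": dict(details),  # copy so later caller edits don't leak in
            "timestamp": time.time(),  # time-stamped
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    def verify(self) -> bool:
        """Recompute every hash; False means an entry was altered or removed mid-chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

    def replay(self, agent_id: str) -> list[str]:
        """An agent's actions in the order they were recorded."""
        return [e["action"] for e in self.entries if e["agent_id"] == agent_id]

log = AuditLog()
log.append("intake-agent", "extract_fields", {"case": "C-101"})
log.append("underwriting-agent", "decline", {"case": "C-101", "reason": "flood exposure"})
print(log.verify())  # True
log.entries[1]["details"]["reason"] = "approved manually"  # simulated tampering
print(log.verify())  # False
```

Note that deleting the newest entry would not break the chain; detecting truncation requires storing the latest hash somewhere outside the log.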

4. Human-in-the-Loop: Combining Autonomy with Human Oversight

Not all decisions should be fully automated.

A safe model includes levels of autonomy, such as:

full automation for low-risk tasks

partial automation with human review

mandatory human approval for high-value decisions

This preserves safety while still gaining efficiency.
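These levels of autonomy can be encoded as a simple routing rule. In the sketch below, the thresholds (50,000 and 1,000,000 in decision value, and risk scores of 0.5 and 0.8) are hypothetical placeholders that each organisation would set according to its own risk appetite.

```python
from enum import Enum

class Route(Enum):
    AUTO = "full automation"
    REVIEW = "automated, then human review"
    APPROVAL = "mandatory human approval"

# Hypothetical thresholds; each organisation would set its own risk appetite.
REVIEW_VALUE = 50_000
APPROVAL_VALUE = 1_000_000

def route_decision(value: float, risk_score: float) -> Route:
    """Choose an autonomy level from a decision's monetary value and risk score (0 to 1)."""
    if value >= APPROVAL_VALUE or risk_score >= 0.8:
        return Route.APPROVAL
    if value >= REVIEW_VALUE or risk_score >= 0.5:
        return Route.REVIEW
    return Route.AUTO

print(route_decision(2_000, 0.1).value)      # full automation
print(route_decision(5_000_000, 0.2).value)  # mandatory human approval
```

Checking the stricter tier first means a decision that crosses either the value or the risk threshold is always routed to the most cautious level it qualifies for.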

5. Dynamic Trust Scoring: Evaluating Agents Continuously

Agents should earn autonomy based on:

accuracy

reliability

fairness

compliance behaviour

If an agent underperforms, its trust score decreases and human verification increases.

If its performance improves, its trust score rises and it can handle more cases with less human review.
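A minimal sketch of dynamic trust scoring, assuming each reviewed case is marked pass or fail against the criteria above (accuracy, reliability, fairness, and compliance). The score is an exponential moving average, so recent behaviour counts most, and it maps to the fraction of cases still sent for human verification. The starting value, smoothing factor, and tier boundaries are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TrustScore:
    score: float = 0.5  # new agents start neutral and must earn autonomy
    alpha: float = 0.1  # weight given to the most recent reviewed case

    def update(self, passed: bool) -> float:
        """Exponential moving average of pass/fail review outcomes."""
        self.score = (1 - self.alpha) * self.score + self.alpha * (1.0 if passed else 0.0)
        return self.score

    def review_rate(self) -> float:
        """Fraction of this agent's cases still sent for human verification."""
        if self.score >= 0.9:
            return 0.05
        if self.score >= 0.7:
            return 0.25
        return 1.0

agent = TrustScore()
for _ in range(30):
    agent.update(True)   # a run of good outcomes raises the score
print(round(agent.score, 3), agent.review_rate())  # 0.979 0.05
for _ in range(10):
    agent.update(False)  # a run of failures lowers it again
print(agent.review_rate())  # 1.0
```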

6. Resilience & Containment: Designing for Failure

Engineers must assume that failures will occur.

Systems should be able to:

isolate a faulty agent

revoke its permissions instantly

prevent a single failure from affecting the whole system

continue operating with other agents

This ensures stability even when problems arise.
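The containment steps can be sketched as a small agent pool, loosely modeled on the circuit-breaker pattern: after a set number of failures an agent is quarantined, its permissions are revoked in one step, and work is routed to another agent with the same scope, or escalated to a human if none remains. Agent names, scopes, and the failure threshold are hypothetical.

```python
from typing import Optional

class AgentPool:
    """Routes work to healthy agents and contains faulty ones (hypothetical sketch)."""

    def __init__(self, permissions: dict[str, set[str]], max_failures: int = 3) -> None:
        self.permissions = {a: set(s) for a, s in permissions.items()}  # agent -> scopes
        self.failures = {a: 0 for a in permissions}
        self.quarantined: set[str] = set()
        self.max_failures = max_failures

    def report_failure(self, agent_id: str) -> None:
        self.failures[agent_id] += 1
        if self.failures[agent_id] >= self.max_failures:
            self.quarantine(agent_id)

    def quarantine(self, agent_id: str) -> None:
        self.quarantined.add(agent_id)      # isolate: receives no new work
        self.permissions[agent_id] = set()  # revoke every scope in one step

    def pick(self, scope: str) -> Optional[str]:
        """First healthy agent holding the scope, or None to escalate to a human."""
        for agent_id, scopes in self.permissions.items():
            if agent_id not in self.quarantined and scope in scopes:
                return agent_id
        return None

pool = AgentPool({"pricing-a": {"quote"}, "pricing-b": {"quote"}})
for _ in range(3):
    pool.report_failure("pricing-a")  # repeated failures trip the breaker
print(pool.pick("quote"))             # pricing-b
```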

The Business Value of Trust in Agentic AI

Trust is not just a safety mechanism; it is a competitive advantage.

When organisations adopt trustworthy Agentic AI, they experience:

faster decision-making

higher customer satisfaction

smoother regulatory approval

reduced risk

more consistent outcomes

better user adoption

Trust reduces friction across the entire ecosystem.

Conclusion: Trust as the First Pillar of Agentic AI

Agentic AI promises transformative impact, but only when it is built on a foundation of trust.

Without trust, organisations cannot rely on autonomous decisions, customers lose confidence, and regulators demand justification for every step.

With trust, however:

autonomy becomes scalable

decisions become faster and fairer

AI becomes a strategic differentiator

businesses gain efficiency while preserving safety

The next essential layer after trust is observability: the ability to see, measure, and understand AI behaviour in real time.

Trust tells us what should happen.

Observability reveals what is actually happening.

Together, they create the foundation for safe, reliable, and future-ready Agentic AI.
