How Agentic AI Is Redefining the Role of Testers: A Deep Educational Guide

Software testing has always evolved alongside the technologies it protects. From manual testing to early automation frameworks and eventually full CI/CD pipelines, every era has pushed testers to expand their skills and rethink their responsibilities. Today, the industry stands at another major turning point: the rise of Agentic AI.

Unlike traditional automation, which executes predefined steps, Agentic AI introduces a fundamentally new capability: autonomous intelligence. These AI systems can observe applications, interpret high-level goals, plan tasks, adapt to unexpected changes, and execute actions without human-written step-by-step instructions. For testers, this doesn’t just shift the testing landscape; it redesigns it from the ground up.

This educational guide will break down what Agentic AI really is, how it transforms QA workflows, what opportunities it creates, and the new responsibilities testers must embrace. Everything here is grounded in capabilities already emerging in AI-powered test engineering, without exaggeration or speculative claims.

1. Understanding Agentic AI: The Next Frontier of Testing

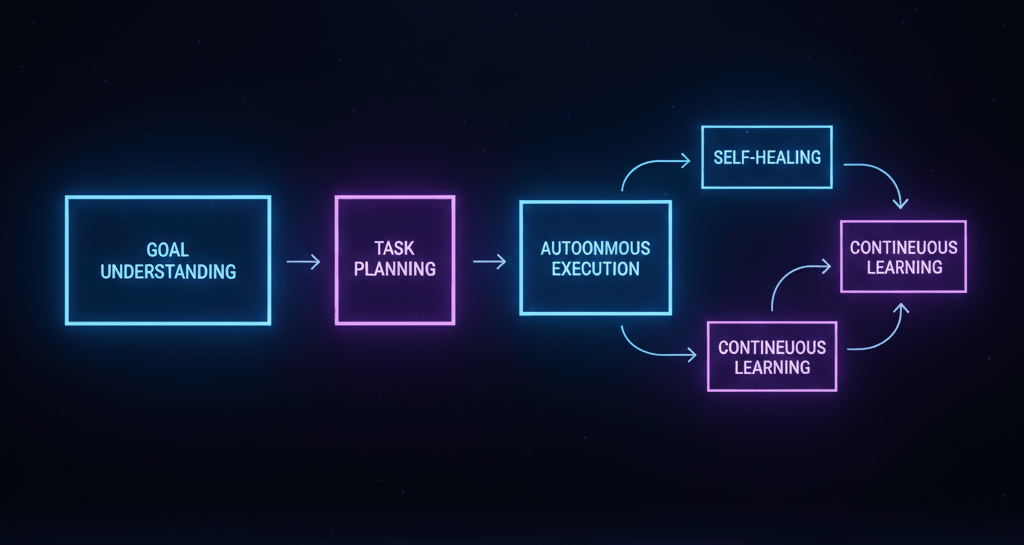

Agentic AI refers to AI systems capable of autonomous decision-making. Instead of simply following scripts or reacting to predefined triggers, agentic models can:

Interpret a goal (e.g., “test this checkout flow”)

Break it into logical steps

Examine the system under test

Decide which test paths make sense

Adapt when something unexpected happens

Learn from failures or application changes

This behavior mimics how human testers reason:

“What should happen here?”

“What scenarios could break this feature?”

“What if the UI changes?”

“What path should I explore next?”

Traditional testing automation is static. Agentic AI is adaptive.
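The goal, plan, act, adapt cycle described above can be sketched as a small loop. This is a toy illustration under stated assumptions, not any real tool's API: `FakeCheckoutApp`, `plan`, and `run_agent` are hypothetical names, and the "planner" is a hard-coded lookup standing in for a model.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCheckoutApp:
    """Hypothetical stand-in for a system under test."""
    popup_open: bool = True          # an unexpected promo pop-up blocks the page
    done: list = field(default_factory=list)

    def perform(self, action: str) -> str:
        if action == "dismiss_popup":
            self.popup_open = False
            return "ok"
        if self.popup_open:
            return "blocked_by_popup"
        self.done.append(action)
        return "ok"


def plan(goal: str) -> list:
    # A real agent would derive steps from the goal; this lookup is a placeholder.
    plans = {"test checkout flow": ["add_item", "open_cart", "pay", "verify_receipt"]}
    return list(plans[goal])


def run_agent(goal: str, app: FakeCheckoutApp, max_actions: int = 20) -> list:
    """Plan, act, observe, and adapt until the plan is finished."""
    queue = plan(goal)
    log = []
    while queue and len(log) < max_actions:
        action = queue.pop(0)
        result = app.perform(action)
        log.append((action, result))
        if result == "blocked_by_popup":
            # Adapt: clear the obstacle, then retry the same step.
            queue[:0] = ["dismiss_popup", action]
    return log


log = run_agent("test checkout flow", FakeCheckoutApp())
for action, result in log:
    print(f"{action}: {result}")
```

A fixed script would stop at the first blocked step. The loop instead records the obstacle, inserts a recovery action, and carries on, while `max_actions` keeps a confused agent from running forever.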

Why This Matters for Testers

Agentic AI doesn’t try to replace human testers. Instead, it handles the labor-heavy, repetitive, or reactive tasks, allowing testers to move into more strategic, analytical, and creative roles.

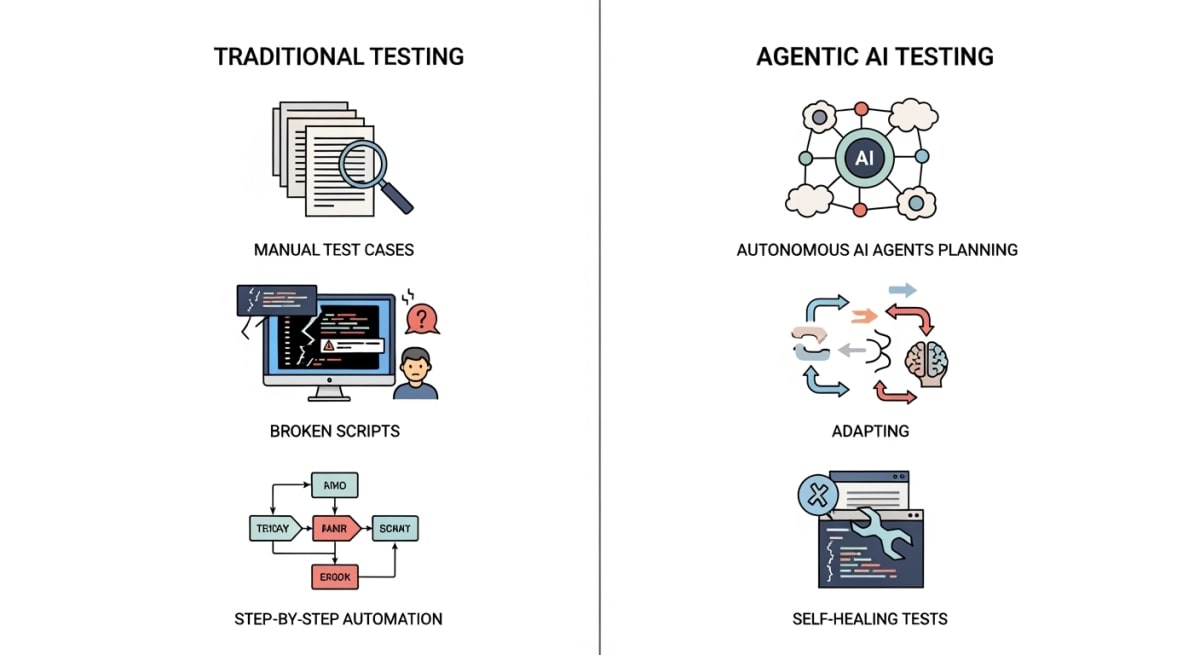

2. Traditional Automation vs. Agentic AI: What’s the Real Difference?

To understand the shift, let’s compare how both approaches behave in real testing environments.

Traditional Automation

Executes pre-written scripts

Fails when UI changes

Cannot make decisions

Needs constant maintenance

Depends heavily on test engineers to “tell it what to do”

Agentic AI

Understands goals instead of scripts

Adjusts when elements change

Makes decisions dynamically (e.g., handle pop-ups, alternative paths)

Learns from past runs

Generates new tests from documentation, UI, and user behavior

The difference is thinking vs. following commands.

3. How Agentic AI Is Transforming Test Automation

The impact of Agentic AI on test engineering can already be seen in five major areas.

a) Autonomous Test Case Generation

One of the biggest time drains for testers is reading requirements, understanding business logic, and converting them into actionable test cases.

Agentic AI can:

Analyze requirement documents

Study Figma or UI design files

Inspect user flows

Recognize input fields, buttons, and workflows

Automatically create relevant test cases

This mirrors how a human tester asks:

“What is the user expected to do here, and what needs to be validated?”

Rather than mass-producing thousands of flaky tests, agentic systems aim for a smaller set of context-aware tests tied to what the user is actually trying to do.
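To make the idea concrete, here is a minimal sketch of deriving test cases from a description of an input field. It uses plain boundary-value analysis rather than any AI model, and the `FieldSpec` structure is a hypothetical, hand-written stand-in for what a tool might infer from requirements or design files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical description of one numeric input field."""
    name: str
    minimum: int
    maximum: int
    required: bool = True


def generate_cases(spec: FieldSpec) -> list:
    """Derive boundary-value test cases from a field's constraints."""
    cases = [
        (spec.minimum - 1, False, "just below minimum"),
        (spec.minimum, True, "at minimum"),
        (spec.maximum, True, "at maximum"),
        (spec.maximum + 1, False, "just above maximum"),
    ]
    # An empty value is valid only when the field is optional.
    cases.append((None, not spec.required, "empty value"))
    return [
        {"field": spec.name, "input": value, "expect_valid": ok, "why": why}
        for value, ok, why in cases
    ]


quantity = FieldSpec(name="quantity", minimum=1, maximum=99)
for case in generate_cases(quantity):
    print(case)
```

Each generated case carries a `why`, so a tester reviewing the output can see the reasoning behind it and discard cases that don't matter for the business.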

b) Intelligent Test Maintenance (Self-Healing)

Every tester knows the pain of:

Broken locators

Changing UI layouts

Modified workflows

Updated component names

Deleted elements

Traditional automation simply breaks.

Agentic AI, however, can:

Detect element changes

Map new UI components

Repair test steps automatically

Suggest updated scripts when required

Re-run tests to validate the fix

This can cut hours of script maintenance down to minutes of automated correction.
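One simple way to picture self-healing is attribute matching: when the original locator fails, look for the element that still shares the most attributes with the one that worked last time. The sketch below assumes a page represented as a list of dictionaries; `find_element`, `heal_locator`, and all attribute values are hypothetical, and real tools use richer signals than this.

```python
def find_element(dom: list, locator: dict):
    """Return the first element whose attributes all match the locator."""
    for element in dom:
        if all(element.get(k) == v for k, v in locator.items()):
            return element
    return None


def heal_locator(dom: list, fingerprint: dict, min_score: int = 2):
    """Pick the element sharing the most attributes with the last known fingerprint."""
    best, best_score = None, 0
    for element in dom:
        score = sum(1 for k, v in fingerprint.items() if element.get(k) == v)
        if score > best_score:
            best, best_score = element, score
    return best if best_score >= min_score else None


# Fingerprint recorded on an earlier passing run (all values are made up).
fingerprint = {"id": "submit-btn", "tag": "button", "text": "Place order", "role": "button"}

# The page after a refactor: the id was renamed, everything else is unchanged.
dom = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "role": "button"},
    {"id": "order-submit", "tag": "button", "text": "Place order", "role": "button"},
]

element = find_element(dom, {"id": "submit-btn"})
healed = False
if element is None:
    element = heal_locator(dom, fingerprint)
    healed = element is not None

print(element["id"], "(healed)" if healed else "")
```

Note that the Cancel button also shares two attributes with the fingerprint. A healed locator is a guess, so it is worth flagging every heal for human review instead of silently rewriting the script.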

c) Dynamic Decision-Making During Test Execution

This is where Agentic AI truly becomes “agentic.”

Instead of failing when an unexpected scenario appears, it can:

Recognize unplanned pop-ups

Navigate around unstable UI elements

Choose alternate paths

Reattempt blocked actions

Skip irrelevant screens and continue testing

This is very similar to how an experienced tester behaves:

“If this error appears, I’ll close it and continue checking the main flow.”

AI agents don’t panic; they adapt.
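The "reattempt, then choose an alternate path" behavior can be sketched as a bounded retry with fallbacks. Everything here is illustrative: `execute_step`, the step dictionary layout, and the action names are assumptions, and `perform` is a fake that pretends the card form is broken.

```python
def execute_step(step: dict, perform, max_attempts: int = 2) -> dict:
    """Try the primary action, retry it, then fall back to alternates in order."""
    tried = []
    for action in [step["primary"], *step.get("alternates", [])]:
        for _ in range(max_attempts):
            tried.append(action)
            if perform(action):
                return {"step": step["name"], "status": "passed", "via": action, "tried": tried}
    return {"step": step["name"], "status": "failed", "via": None, "tried": tried}


# Hypothetical app behaviour: the card form is broken, the wallet button works.
def perform(action: str) -> bool:
    return action != "pay_with_card_form"


step = {
    "name": "pay",
    "primary": "pay_with_card_form",
    "alternates": ["pay_with_saved_wallet"],
}
outcome = execute_step(step, perform)
print(outcome)
```

The `tried` list matters as much as the final status. The step passed through an alternate route, but the broken primary path is still a finding a tester should see and report.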

d) Improved Test Coverage Through Intelligent Exploration

Testers often operate under time pressure:

Limited regression cycles

Short sprints

Multiple features shipping at once

Agentic AI agents can perform autonomous exploratory testing, identifying:

Edge cases

Misaligned UI states

Inconsistent responses

Rare failures

Non-documented behaviors

They can map user flows the team didn’t even know existed.

This leads to:

Fewer escaped defects

Higher product quality

More stable releases
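Autonomous exploration can be pictured as a graph walk over screens and actions. The sketch below uses a breadth-first search over a hand-written, hypothetical screen graph (`APP`) and compares what it reaches against a list of documented screens; a real agent would discover the graph by driving the application.

```python
from collections import deque

# Hypothetical screen graph: screen -> {action: next screen}.
APP = {
    "home": {"search": "results", "open_cart": "cart"},
    "results": {"open_item": "item", "back": "home"},
    "item": {"add_to_cart": "cart", "back": "results"},
    "cart": {"checkout": "payment", "apply_coupon": "coupon_error"},
    "payment": {"pay": "receipt"},
    "coupon_error": {},   # dead end with no way back: worth a bug report
    "receipt": {},
}
DOCUMENTED = {"home", "results", "item", "cart", "payment", "receipt"}
TERMINAL_OK = {"receipt"}


def explore(app: dict, start: str) -> dict:
    """Breadth-first walk returning the shortest action path to each screen."""
    paths = {start: []}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        for action, target in app[screen].items():
            if target not in paths:
                paths[target] = paths[screen] + [action]
                queue.append(target)
    return paths


paths = explore(APP, "home")
undocumented = sorted(set(paths) - DOCUMENTED)
dead_ends = sorted(s for s in paths if not APP[s] and s not in TERMINAL_OK)
print("undocumented:", undocumented)
print("dead ends:", dead_ends)
print("path to coupon_error:", paths["coupon_error"])
```

Because the walk keeps the action path to every screen, each finding comes with reproduction steps attached.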

e) Support for Shift-Left and Shift-Right Testing

Modern testing is no longer about “testing at the end.”

Agentic AI integrates across the SDLC:

Shift-Left

Analyzes pull requests

Suggests test scenarios early

Runs tests in CI pipelines

Highlights areas of risk before merging

Shift-Right

Observes production logs

Extracts real user journeys

Generates test cases based on actual usage patterns

Detects anomalies from live interactions

This makes testing continuous, contextual, and data-driven.
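As a rough illustration of the shift-right side, the following sketch groups log events by session, counts the page sequences users actually followed, and turns the most common ones into regression candidates. The event format and the functions `extract_journeys` and `to_test_cases` are assumptions made for this example; the data is made up.

```python
from collections import Counter, defaultdict

# Made-up production events: (session_id, timestamp, page).
events = [
    ("s1", 1, "home"), ("s1", 2, "search"), ("s1", 3, "item"), ("s1", 4, "checkout"),
    ("s2", 1, "home"), ("s2", 2, "search"), ("s2", 3, "item"), ("s2", 4, "checkout"),
    ("s3", 1, "home"), ("s3", 2, "wishlist"), ("s3", 3, "checkout"),
]


def extract_journeys(events: list) -> Counter:
    """Group events by session, order them by time, and count each page sequence."""
    sessions = defaultdict(list)
    for session_id, timestamp, page in events:
        sessions[session_id].append((timestamp, page))
    journeys = Counter()
    for visits in sessions.values():
        journeys[tuple(page for _, page in sorted(visits))] += 1
    return journeys


def to_test_cases(journeys: Counter, top_n: int = 2) -> list:
    """Turn the most frequent journeys into named regression candidates."""
    return [
        {"name": "journey_" + "_".join(path), "steps": list(path), "sessions": count}
        for path, count in journeys.most_common(top_n)
    ]


for case in to_test_cases(extract_journeys(events)):
    print(case)
```

Ranking by session count is only one possible choice. A rare journey through a high-value flow may deserve a test before a popular but low-risk one, which is a call for the tester to make.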

4. How Agentic AI Changes the Tester’s Daily Workflow

Agentic AI changes not just the tools testers use but the nature of their work.

Here’s what a typical tester often handles today:

Reading requirements

Writing manual test cases

Updating scripts

Running regression suites

Logging bugs

Re-testing fixes

Maintaining automation frameworks

Now compare that to a future workflow with Agentic AI:

Tasks Handled by AI

Test case creation

Script maintenance

Regression execution

Environment setup

Real-time adaptation

Documentation updates

Exploratory testing at scale

Tasks Handled by Testers

Understanding business logic

Prioritizing what matters

Designing test strategies

Validating AI decisions

Exploring creative scenarios

Assessing user experience

Communicating risks

The Benefits

Lower manual workload

Reduced tester burnout

Higher test coverage

Faster feedback loops

More intelligent discussions with developers

More time to focus on quality, not clicks

Agentic AI doesn’t replace testers; it elevates them.

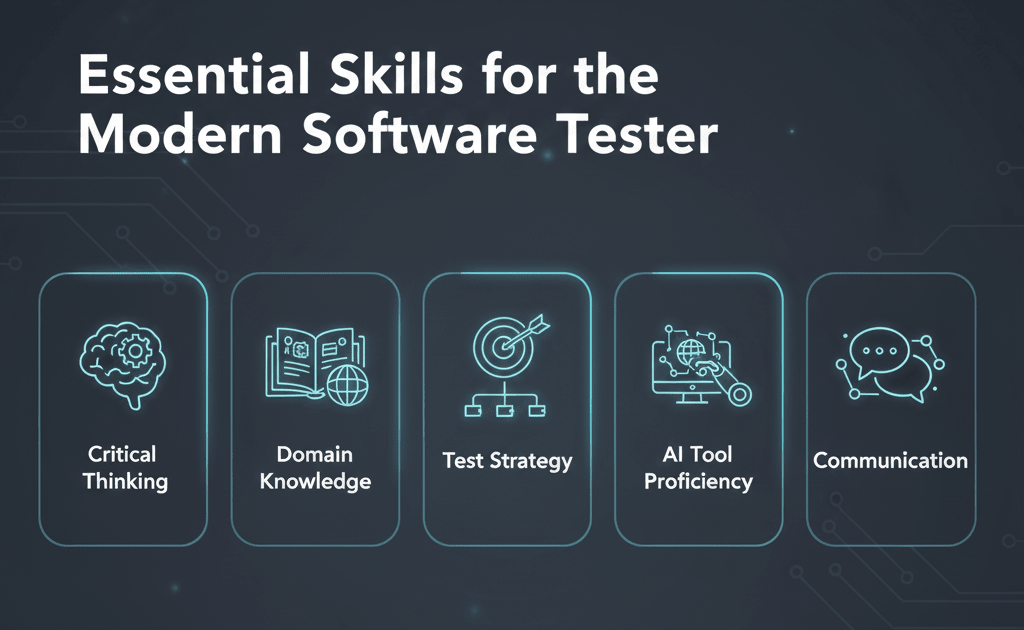

5. New Skills Testers Need in the Age of Agentic AI

As AI takes over repetitive work, testers evolve into AI-guided quality engineers.

Here are the skills that will matter most:

a) Deep Domain Understanding

AI can analyze patterns, but it doesn’t know:

Business priorities

Regulatory constraints

Real customer expectations

Critical vs. low-risk scenarios

Testers who understand the product deeply become invaluable.

b) Test Strategy Design

Agentic AI can execute, but it still needs direction.

Testers must decide:

What features matter most

Which risks carry the highest impact

What level of coverage is acceptable

Which areas need human exploration

AI needs a strategy to execute against.
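A strategy can be handed to an agent in a very plain form. The sketch below scores features with the common impact times likelihood heuristic and flags the riskiest ones for human exploration. The `Feature` class, the 1 to 5 scales, and the threshold of 15 are illustrative assumptions, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    impact: int        # 1 (cosmetic) to 5 (revenue or compliance critical)
    likelihood: int    # 1 (stable code) to 5 (heavily changed this sprint)

    @property
    def risk(self) -> int:
        return self.impact * self.likelihood


def build_strategy(features: list, human_review_at: int = 15) -> list:
    """Rank features by risk and flag the riskiest for human exploration."""
    ranked = sorted(features, key=lambda f: f.risk, reverse=True)
    return [
        {"feature": f.name, "risk": f.risk, "human_exploration": f.risk >= human_review_at}
        for f in ranked
    ]


features = [
    Feature("profile avatar upload", impact=2, likelihood=2),
    Feature("checkout payment", impact=5, likelihood=4),
    Feature("search filters", impact=3, likelihood=3),
]
for row in build_strategy(features):
    print(row)
```

The scores themselves are where tester judgment enters: the code only sorts numbers, while deciding that checkout is a 5 and avatars are a 2 requires knowing the business.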

c) Critical Thinking and Human Judgment

AI may misinterpret certain scenarios. Testers must:

Evaluate whether AI-generated tests make sense

Validate that AI’s decisions are logical

Distinguish between real issues and false positives

Human intuition remains irreplaceable.
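One practical aid for separating real issues from false positives is to re-run a failed test several times and look at how consistently it fails. The sketch below is a simplified assumption of how that triage might look; `triage`, `scripted`, and the three labels are hypothetical, and the labels are hints for a human, not verdicts.

```python
def triage(run_test, reruns: int = 3) -> str:
    """Re-run a failed test and classify it by how consistently it fails."""
    failures = sum(1 for _ in range(reruns) if not run_test())
    if failures == reruns:
        return "real_defect_candidate"
    if failures == 0:
        return "flaky_candidate"
    return "needs_investigation"


def scripted(results: list):
    """Build a fake test that returns the given pass/fail results in order."""
    outcomes = iter(results)
    return lambda: next(outcomes)


print(triage(scripted([False, False, False])))
print(triage(scripted([True, True, True])))
print(triage(scripted([False, True, False])))
```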

d) AI Tool Awareness

Testers don’t need to become ML experts, but they must:

Understand how agentic testing tools work

Know how to configure goals and constraints

Supervise AI performance

Interpret AI-generated reports

This is similar to how testers once had to learn Selenium or Cypress.
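Configuring goals and constraints might look something like the sketch below. `AgentRunConfig` and its fields are hypothetical and do not reflect any real tool's schema; the point is only that a goal comes with explicit limits the agent is checked against.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class AgentRunConfig:
    """Hypothetical run configuration; no real tool's schema is implied."""
    goal: str
    allowed_hosts: list
    max_steps: int = 50
    forbidden_actions: list = field(default_factory=lambda: ["delete_account", "real_payment"])

    def validate(self) -> list:
        """Return a list of configuration problems (empty means usable)."""
        problems = []
        if not self.goal.strip():
            problems.append("goal is empty")
        if not self.allowed_hosts:
            problems.append("no allowed hosts: the agent could wander anywhere")
        if self.max_steps <= 0:
            problems.append("max_steps must be positive")
        return problems

    def permits(self, action: str, url: str) -> bool:
        """Check one proposed action against the constraints."""
        host = urlparse(url).hostname
        return action not in self.forbidden_actions and host in self.allowed_hosts


config = AgentRunConfig(goal="Verify guest checkout", allowed_hosts=["staging.example.test"])
print(config.validate())
print(config.permits("click", "https://staging.example.test/cart"))
print(config.permits("real_payment", "https://staging.example.test/pay"))
print(config.permits("click", "https://prod.example.test/cart"))
```

Keeping the constraints in data instead of in the agent's prompt makes them easy to review and hard for the agent to talk its way around.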

e) Strong Communication Skills

AI can generate logs, screenshots, or flow diagrams, but testers must communicate:

Risks

Insights

Testing outcomes

Release confidence levels

Cross-functional clarity becomes more important than ever.

6. The Limitations of Agentic AI (and Why Testers Still Matter)

Agentic AI is powerful, but it is not perfect. It has real constraints testers must be aware of.

a) Limited Business Context

AI may not understand:

Legal constraints

Business priorities

UX expectations

Cultural nuances

It can operate the system, but it doesn’t “understand” the why.

b) Decision Transparency

AI can make choices that seem correct but are not fully explainable.

Testers must ensure the AI’s decisions align with the test strategy.
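One way to make that check possible is to have the agent record each decision with its reason, then audit the log against the strategy. The following is a minimal sketch under that assumption; the `Decision` record, its fields, and the example entries are all hypothetical.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class Decision:
    step: int
    area: str           # part of the product the action touched
    chosen: str
    rejected: list      # options the agent considered and did not take
    reason: str


def out_of_strategy(decisions: list, strategy_areas: set) -> list:
    """Return decisions that touched areas the test strategy does not cover."""
    return [d for d in decisions if d.area not in strategy_areas]


decisions = [
    Decision(1, "checkout", "use_guest_checkout", ["sign_in"], "goal targets guest flow"),
    Decision(2, "admin", "open_admin_panel", ["go_back"], "link looked related to orders"),
]
strategy_areas = {"checkout", "cart"}

flagged = out_of_strategy(decisions, strategy_areas)
for d in flagged:
    print(json.dumps(asdict(d)))
```

A recorded reason is not a full explanation of the model's behavior, but it gives the tester something concrete to accept or challenge.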

c) Over-reliance on AI

There is a risk of teams becoming passive:

Letting AI generate all tests

Ignoring exploratory testing

Assuming AI “understands everything”

Human involvement remains essential.

d) Potential for Misinterpretation

AI may incorrectly categorize:

Expected vs. unexpected behaviors

True vs. false failures

Critical vs. trivial issues

Human testers anchor the process with real-world understanding.

7. The Future of Testing: A Collaboration Between Humans and AI

Agentic AI is not a replacement for testers; it is a co-tester.

A tireless assistant.

A force multiplier.

Testers who embrace it will:

Spend less time on busywork

Gain more time for creativity

Make more strategic contributions

Influence product quality more deeply

The testers who thrive will be:

Curious

Adaptive

Analytical

Business-focused

AI-aware

As AI handles the execution, testers become quality leaders, strategists, and product thinkers.

Final Thoughts

Agentic AI represents one of the biggest shifts in the history of software testing. It doesn’t just automate; it plans, adapts, and learns. This transformation opens the door for testers to move away from repetitive tasks and toward high-level quality engineering work.

The future of testing is not about choosing between humans or AI.

The future is humans + AI, working together.

Testers who embrace agentic systems will lead the next era of software quality, an era defined by speed, intelligence, adaptability, and continuous improvement.
